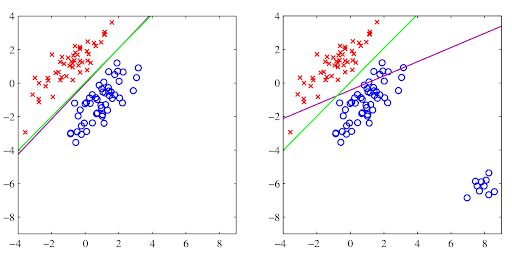

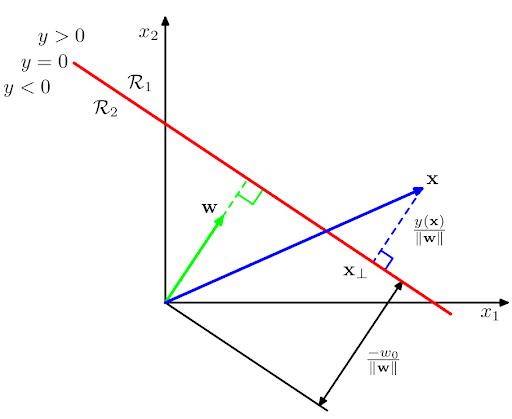

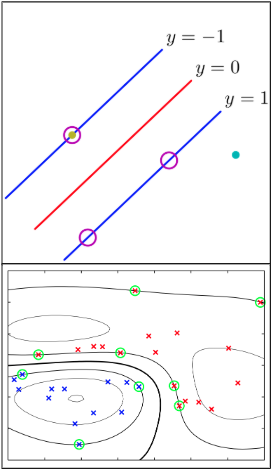

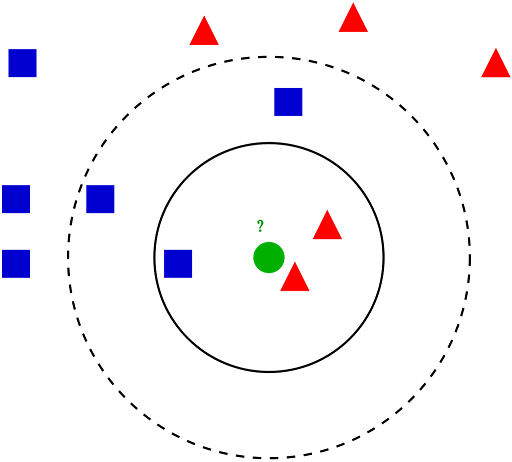

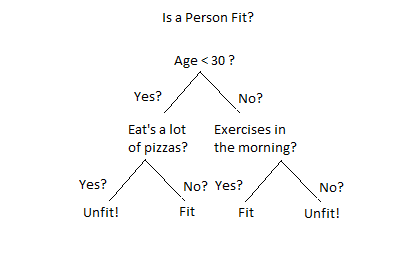

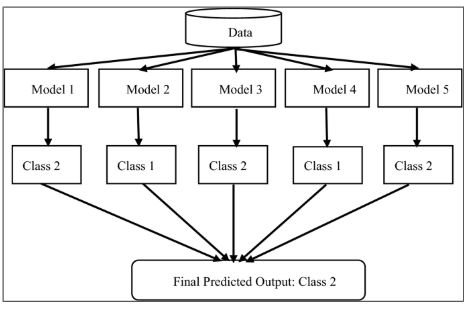

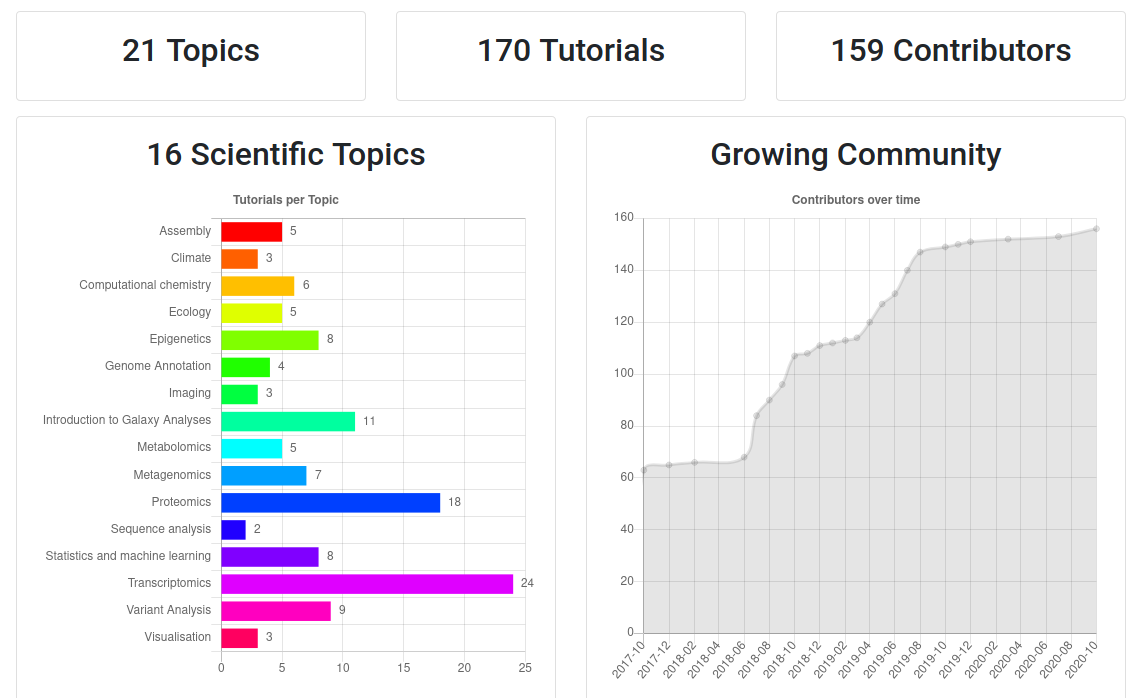

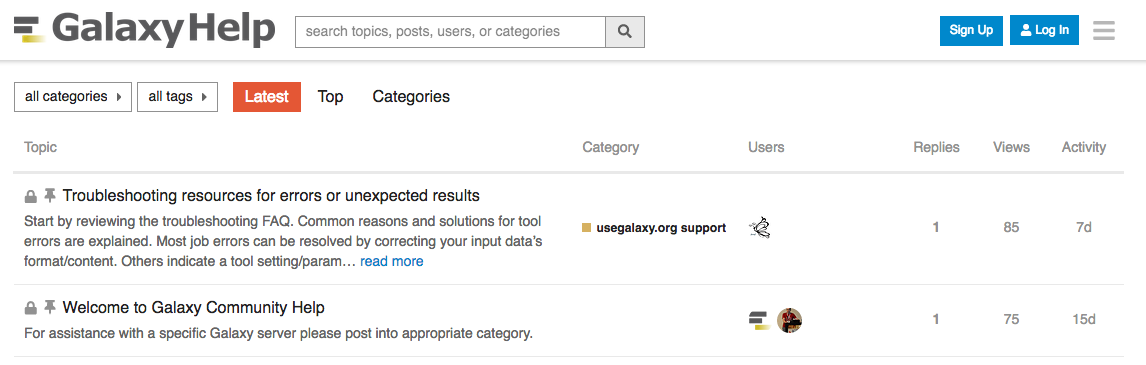

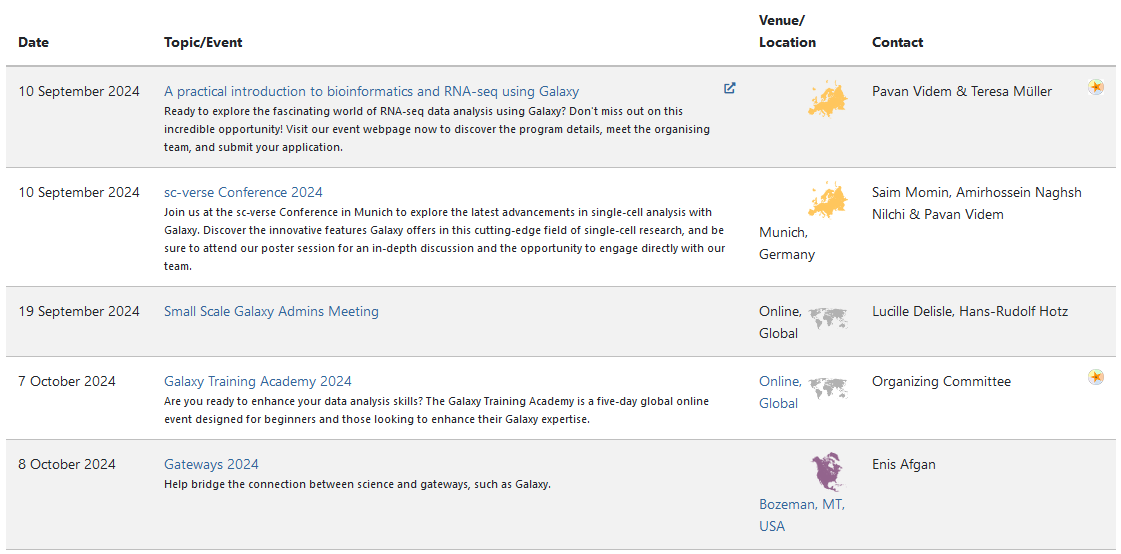

name: inverse
layout: true
class: center, middle, inverse

---

# Classification in Machine Learning

[Anup Kumar](/training-material/hall-of-fame/anuprulez/)

<abbr title="Persistent URL">PURL</abbr>: [gxy.io/GTN:S00134](https://gxy.io/GTN:S00134)

[Plain-text slides](slides-plain.html)

**Tip:** press <kbd>P</kbd> to view the presenter notes. Use the arrow keys to move between slides.

???

Presenter notes contain extra information that may be useful if you intend to use these slides for teaching.

Press `P` again to switch presenter notes off.

Press `C` to create a new window that displays the same presentation. This window is linked to the main window: changing slides in one changes the slide in the other, which is useful when presenting.

---

## Requirements

Before diving into this slide deck, we recommend you have a look at:

- [Statistics and machine learning](/training-material/topics/statistics)
    - Basics of machine learning: [slides](/training-material/topics/statistics/tutorials/machinelearning/slides.html) - [hands-on](/training-material/topics/statistics/tutorials/machinelearning/tutorial.html)

---

### Questions

- What is classification, and how can we use classification techniques?

---

### Objectives

- Learn classification background
- Learn what a quantitative structure-activity relationship (QSAR) model is and how it can be constructed in Galaxy
- Learn to apply logistic regression, k-nearest neighbors, support vector machines, random forests and bagging algorithms
- Learn how visualizations can be used to analyze the classification results

---

# What is classification in machine learning?

???

What is classification in machine learning?

---

# Classification

.pull-left[
- Class is a category
  - Represented as an integer (0, 1, 2, 3 ...)
  - Known as categorical variables
- "Supervision": the class of each training sample is known
- Two-class (binary) classification
  - Cancer or no cancer, spam or no spam
- Multi-class classification
  - Handwritten digit recognition
]

.pull-right[
]

<!-- https://pixy.org/3013900/ CC0 license-->

---

# Linear model

.pull-left[
- Input data point x (x1, x2)
- Weights w (w0, w1, w2)
  - w0 is the intercept
  - w1, w2 are feature coefficients
- Function y(x) is learned by a linear model
- Decision boundary: y(x) = 0
  - If y(x) > 0, x is assigned to class C1
  - If y(x) < 0, x is assigned to class C2
]

.pull-right[
]

---

# Support vector machines

.pull-left[
- Linear and non-linear variants
- Learn the decision boundary with maximum margin
- Support vectors are the data points that lie closest to the decision boundary
- Only the support vectors are needed for classifying new samples
  - Other data points can be thrown away
]

.pull-right[
]

---

# Nearest neighbour

.pull-left[
- Find a certain number of neighbours
- Neighbours are the closest samples according to a distance metric
- Distance metrics
  - Euclidean distance, Manhattan distance, ...
- A new sample is assigned the class held by the majority of its neighbours
- Example: K-nearest neighbours
  - K is the predefined number of neighbours
- Stores the training data
  - Unsuitable for large datasets
- Can learn even irregular decision boundaries
]

.pull-right[
]

---

# Decision tree

.pull-left[
- Learn decision rules from features
- Split at each non-leaf node using feature values
- Leaf nodes contain the class labels
- Advantages
  - Easy to understand
  - Can be used with categorical and numerical data
  - Prediction cost is logarithmic in the number of training data points
- Disadvantages
  - Overfitting
  - High variance
]

.pull-right[
]

---

# Ensemble method

.pull-left[
- Combine multiple tree estimators
- Approaches
  - Bagging and boosting
- Bagging
  - Average the predictions of several estimators trained independently of each other
  - Parallel execution
  - Examples
    - Bagging, Random forest
- Boosting
  - Improve estimators sequentially
  - No parallel execution
  - Examples
    - AdaBoost, Gradient tree boosting
]

.pull-right[
]

---

# References

- Book: Pattern Recognition and Machine Learning, Author: Christopher Bishop, 2006, Springer
- Nearest neighbours
  - https://en.wikipedia.org/wiki/K-nearest_neighbors_algorithm
- Decision tree
  - https://scikit-learn.org/stable/modules/tree.html#tree
- Ensemble
  - https://scikit-learn.org/stable/modules/ensemble.html

---

# For additional references, please see the tutorial's References section

---

- Galaxy Training Materials ([training.galaxyproject.org](https://training.galaxyproject.org))

???

- If you would like to learn more about Galaxy, many tutorials are available.
- These tutorials cover a wide range of scientific domains.

---

# Getting Help

- **Help Forum** ([help.galaxyproject.org](https://help.galaxyproject.org))
- **Gitter Chat**
    - [Main Chat](https://gitter.im/galaxyproject/Lobby)
    - [Galaxy Training Chat](https://gitter.im/Galaxy-Training-Network/Lobby)
    - Many more channels (scientific domains, developers, admins)

???

- If you get stuck, there are ways to get help.
- You can ask your questions on the help forum.
- Or you can chat with the community on Gitter.

---

# Join an event

- Many Galaxy events across the globe
- Event Horizon: [galaxyproject.org/events](https://galaxyproject.org/events)

???

- There are frequent Galaxy events all around the world.
- You can find upcoming events on the Galaxy Event Horizon.

---

### Key points

- Classification is a supervised approach in machine learning.
- For classification tasks, data is divided into training and test sets.
- A classifier learns from the samples in the training set and is evaluated on its predictions for the test set.
- For each classification algorithm, the parameters should be optimised based on the dataset.
- Classification algorithms can be applied to, for example, chemical datasets to predict important properties.

---

## Thank You!

This material is the result of collaborative work. Thanks to the [Galaxy Training Network](https://training.galaxyproject.org) and all the contributors!

- Author(s): [Anup Kumar](/training-material/hall-of-fame/anuprulez/)
- Reviewers: [Björn Grüning](/training-material/hall-of-fame/bgruening/), [Teresa Müller](/training-material/hall-of-fame/teresa-m/), [Martin Čech](/training-material/hall-of-fame/martenson/), [Armin Dadras](/training-material/hall-of-fame/dadrasarmin/)

Tutorial content is licensed under the [Creative Commons Attribution 4.0 International License](http://creativecommons.org/licenses/by/4.0/).
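
---

# Sketch: linear decision rule and k-nearest neighbours

The linear decision rule and the k-nearest-neighbour majority vote described in this deck can be sketched in plain Python. This is only a minimal illustration, not the implementation used by Galaxy or scikit-learn. It assumes the linear function has the form y(x) = w0 + w1*x1 + w2*x2 and that neighbours are ranked by Euclidean distance. The function names, weights, and toy data are hypothetical and chosen only for this example.

```python
import math
from collections import Counter


def linear_classify(x, w):
    """Assign x = (x1, x2) to C1 or C2 using y(x) = w0 + w1*x1 + w2*x2 (assumed form)."""
    w0, w1, w2 = w
    y = w0 + w1 * x[0] + w2 * x[1]
    if y > 0:
        return "C1"
    if y < 0:
        return "C2"
    return "boundary"  # y(x) = 0: the point lies exactly on the decision boundary


def knn_classify(x, samples, labels, k=3):
    """Return the majority class among the k stored training samples closest to x."""
    # Euclidean distance from x to every stored training sample
    distances = [math.dist(x, s) for s in samples]
    # Indices of the k smallest distances
    nearest = sorted(range(len(samples)), key=distances.__getitem__)[:k]
    # Majority vote over the labels of the k nearest neighbours
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical weights: the decision boundary is the line x1 + x2 = 1
weights = (-1.0, 1.0, 1.0)
print(linear_classify((2.0, 0.5), weights))  # C1
print(linear_classify((0.2, 0.3), weights))  # C2

# Hypothetical toy training set with two classes (0 and 1)
train_x = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8)]
train_y = [0, 0, 1, 1, 1]
print(knn_classify((0.95, 0.9), train_x, train_y, k=3))  # 1
```

Note that `knn_classify` keeps the whole training set and computes a distance to every stored sample for each prediction, which is why the method becomes impractical for large datasets.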