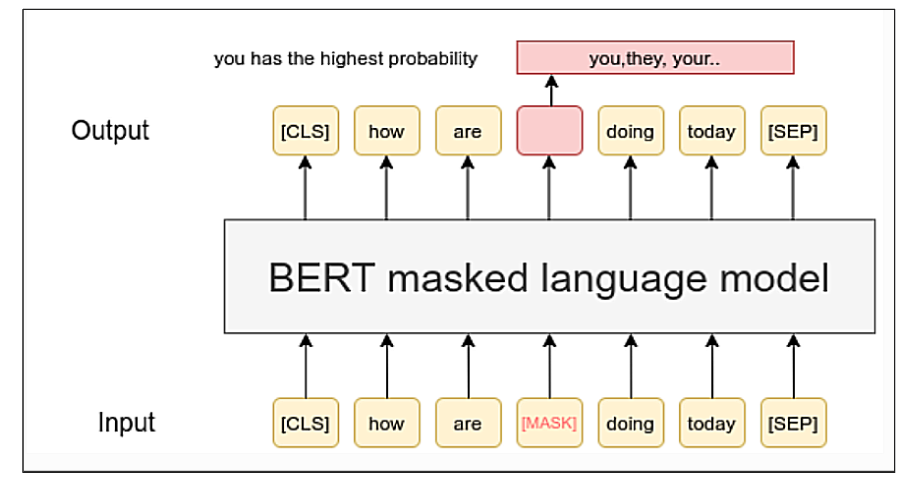

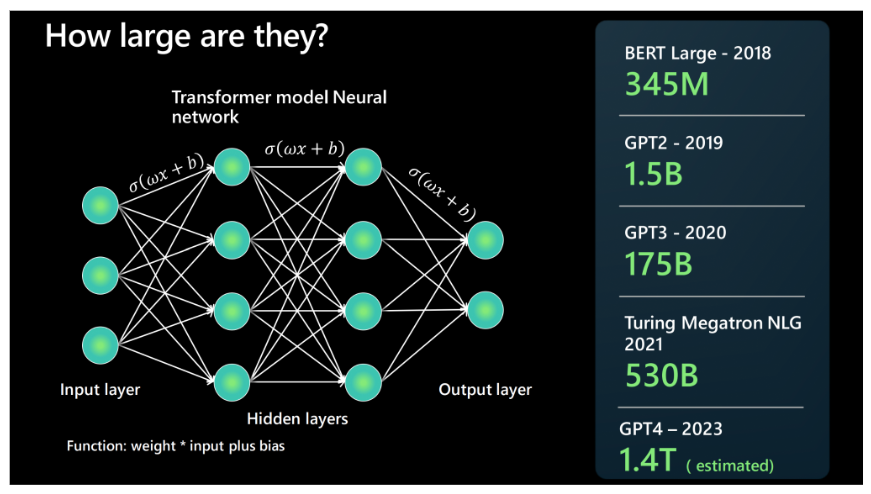

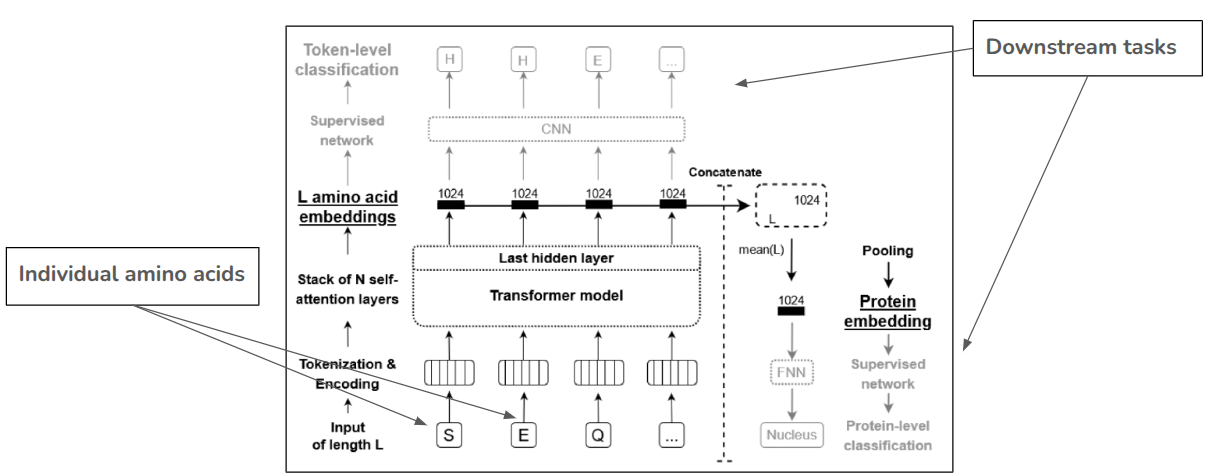

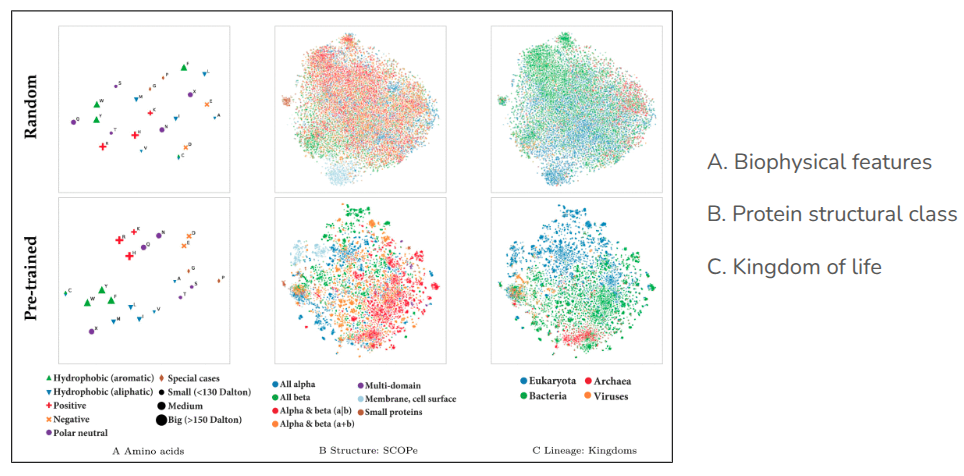

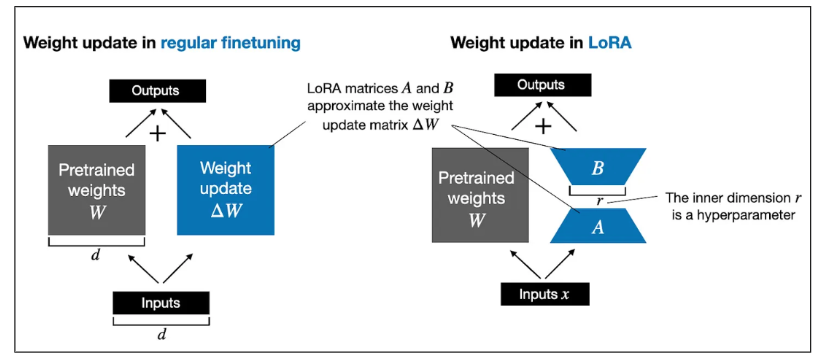

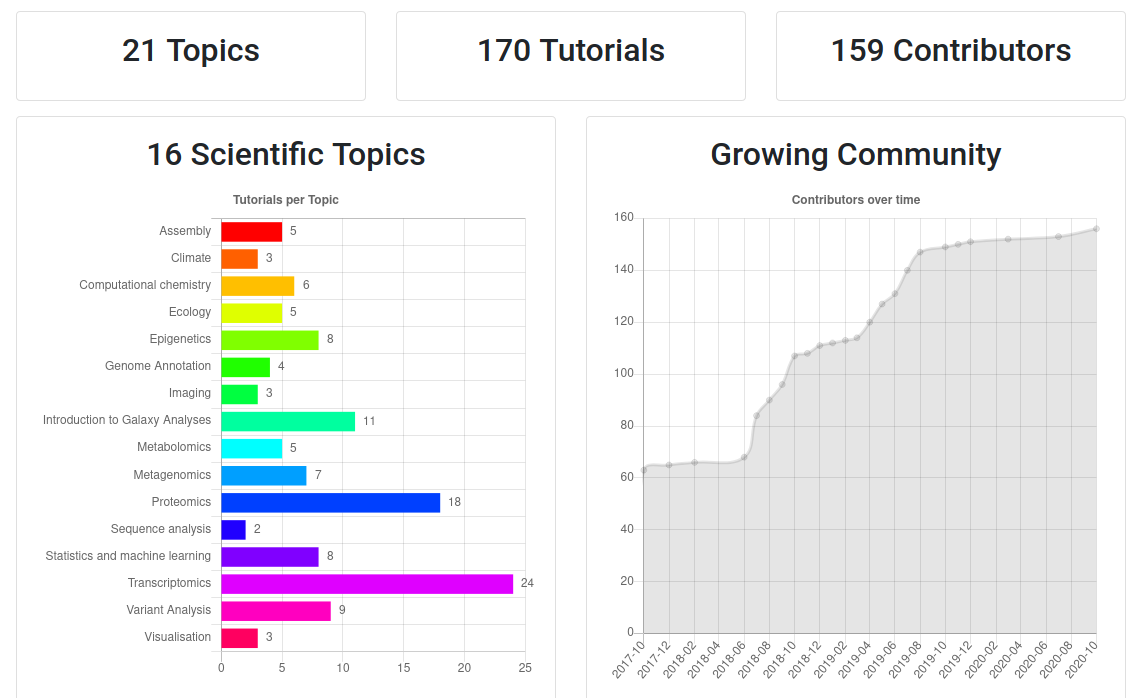

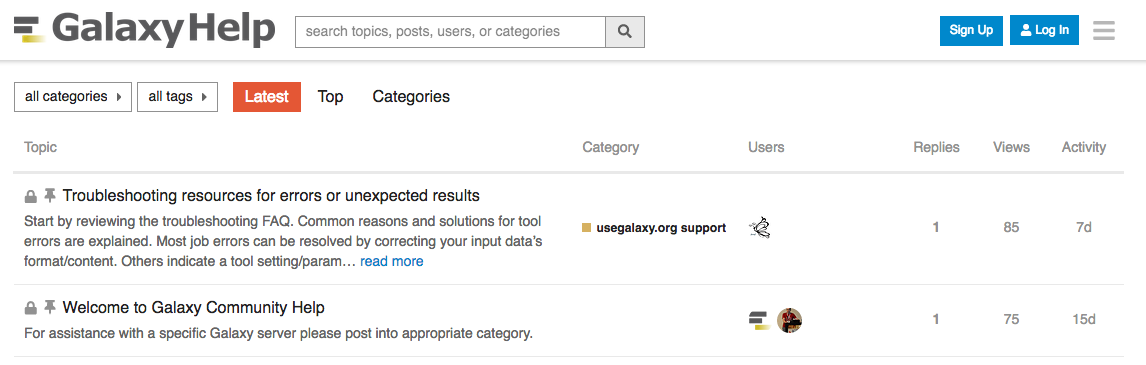

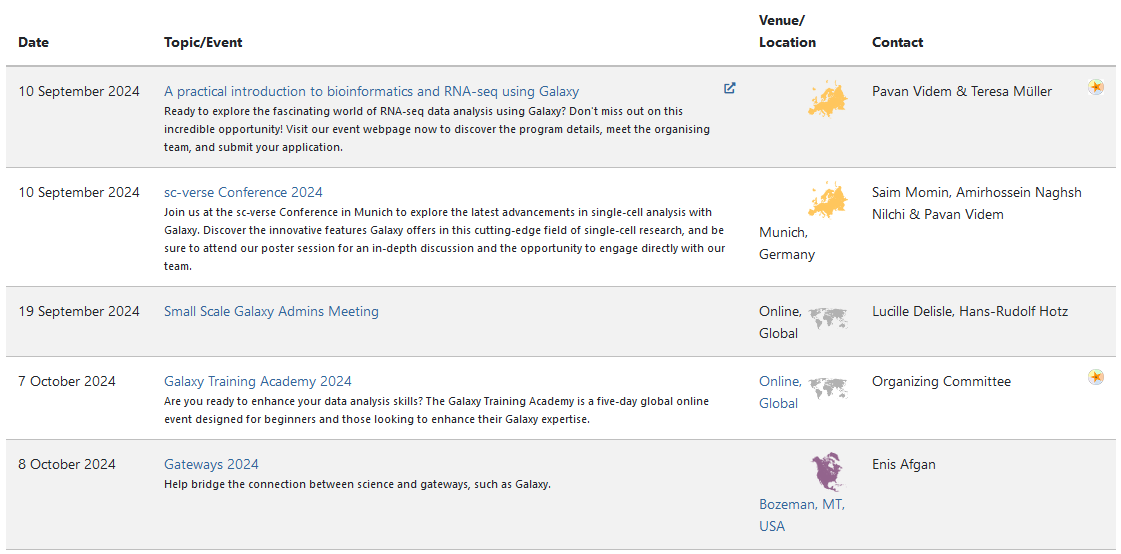

name: inverse
layout: true
class: center, middle, inverse

---

# Fine-tuning Protein Language Model

<a href="/training-material/hall-of-fame/anuprulez/" class="contributor-badge contributor-anuprulez">Anup Kumar</a>

<abbr title="Persistent URL">PURL</abbr>: <a href="https://gxy.io/GTN:S00135">gxy.io/GTN:S00135</a>

<strong>Tip: </strong>press <kbd>P</kbd> to view the presenter notes | Use arrow keys to move between slides

???

Presenter notes contain extra information which might be useful if you intend to use these slides for teaching.

Press `P` again to switch presenter notes off.

Press `C` to create a new window where the same presentation will be displayed. This window is linked to the main window. Changing slides on one will cause the slide to change on the other.

Useful when presenting.

---

## Requirements

Before diving into this slide deck, we recommend that you have a look at:

- [Statistics and machine learning](/training-material/topics/statistics)
    - Basics of machine learning: [slides](/training-material/topics/statistics/tutorials/machinelearning/slides.html) - [hands-on](/training-material/topics/statistics/tutorials/machinelearning/tutorial.html)
    - Classification in Machine Learning: [slides](/training-material/topics/statistics/tutorials/classification_machinelearning/slides.html) - [hands-on](/training-material/topics/statistics/tutorials/classification_machinelearning/tutorial.html)

---

### Questions

- How to load large protein AI models?
- How to fine-tune such models on downstream tasks such as post-translational modification site prediction?

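---

# Sketch: preparing sequences for ProtT5

A minimal sketch of the "load and use" question above, not the tutorial's own code. It assumes the `Rostlab/prot_t5_xl_half_uniref50-enc` checkpoint on HuggingFace and the `transformers` and `sentencepiece` packages; neither is named in these slides. The helper names `prepare_sequence` and `load_prott5_encoder` are hypothetical. Only the lightweight preprocessing runs here; the download step is wrapped in a function that is not called.

```python
import re


def prepare_sequence(sequence: str) -> str:
    """Format one protein sequence the way ProtT5 tokenizers expect it."""
    # Map the rare/ambiguous residues U, Z, O and B to the unknown residue X.
    cleaned = re.sub(r"[UZOB]", "X", sequence.upper())
    # Each amino acid is one token, so separate residues with spaces.
    return " ".join(cleaned)


def load_prott5_encoder(checkpoint: str = "Rostlab/prot_t5_xl_half_uniref50-enc"):
    """Fetch tokenizer and encoder (assumed checkpoint; needs network access)."""
    # Imported lazily so prepare_sequence works without the heavy dependencies.
    from transformers import T5EncoderModel, T5Tokenizer

    tokenizer = T5Tokenizer.from_pretrained(checkpoint, do_lower_case=False)
    model = T5EncoderModel.from_pretrained(checkpoint)
    return tokenizer, model.eval()


print(prepare_sequence("MKTUZAYB"))  # M K T X X A Y X
```

The spaced string is what would be passed to the tokenizer returned by `load_prott5_encoder`.
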
---

### Objectives

- Learn to load and use large protein models from HuggingFace
- Learn to fine-tune them on specific tasks such as predicting dephosphorylation sites

---

# Language Models (LM)

- Powerful LMs "understand" language like humans
- LMs are trained to understand and generate human language
    - Popular models: GPT-3, Llama2, Gemini, …
    - Trained on vast datasets; contain billions of parameters
    - Self-supervised learning
        - Masked language modeling
        - Next word/sentence prediction
- Can we train such language models on protein/DNA/RNA sequences?
- LM for life sciences
    - DNABert, ProtBert, **ProtT5**, CodonBert, RNA-FM, ESM/2, BioGPT, …
    - Many are available on HuggingFace

---

# Bidirectional Encoder Representations from Transformers (BERT)

---

# Language Models (LMs)

---

# Protein Language Model (pLM)

- Models trained on large protein databases
    - Big Fantastic Database (BFD, > 2.4 billion sequences), UniProt, …
    - Popular architectures such as BERT, Albert, T5, T5-XXL, …
- Key challenges in training such models
    - Large number of GPUs needed for training: (Prot)TXL needs > 5000 GPUs
    - Expertise needed in large-scale AI training
    - Most labs and researchers don't have access to such resources
- **Solution**: fine-tune pre-trained models on downstream tasks such as protein family classification
- **Benefits**: requires less data, expertise, training time and compute resources

---

# Architecture: Protein Language Model (pLM)

---

# t-SNE embedding projections

---

# Challenges for downstream tasks

- Key tasks
    - Fine-tuning
    - Embedding extraction
- Training challenges
    - ProtT5: **1.2 billion** parameters
    - Longer training time
    - Training or fine-tuning does not fit on a GPU with 15 GB of memory (~26 GB needed)

---

# Low-rank adaptation (LoRA)

- Reduces the number of trainable parameters
    - 1.2 billion to **3.5 million** parameters
    - Fits on GPUs with < 15 GB memory

---

# Use-case: Dephosphorylation (post-translational modification (PTM)) site prediction

- PTM: chemical modifications to a protein after synthesis
    - Crucial for biological processes such as regulating proteins, gene expression, cell cycle, …
- Dephosphorylation
    - Removal of a phosphate group from a molecule
    - Is less studied, and the publicly available labeled dataset is small
    - Hard to train a large deep learning model
    - Fine-tuning might improve site classification accuracy

---

# References

- BERT: https://www.sbert.net/examples/unsupervised_learning/MLM/README.html
- LLM sizes: https://microsoft.github.io/Workshop-Interact-with-OpenAI-models/llms/
- Compute sizes: https://ieeexplore.ieee.org/mediastore/IEEE/content/media/34/9893033/9477085/elnag.t2-3095381-large.gif
- ProtTrans: https://ieeexplore.ieee.org/document/9477085
- LoRA: https://magazine.sebastianraschka.com/p/lora-and-dora-from-scratch
- Dephosphorylation: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8264445/

---

# For additional references, please see the tutorial's References section

---

- Galaxy Training Materials ([training.galaxyproject.org](https://training.galaxyproject.org))

???

- If you would like to learn more about Galaxy, there are a large number of tutorials available.
- These tutorials cover a wide range of scientific domains.

---

# Getting Help

- **Help Forum** ([help.galaxyproject.org](https://help.galaxyproject.org))
- **Gitter Chat**
    - [Main Chat](https://gitter.im/galaxyproject/Lobby)
    - [Galaxy Training Chat](https://gitter.im/Galaxy-Training-Network/Lobby)
    - Many more channels (scientific domains, developers, admins)

???

- If you get stuck, there are ways to get help.
- You can ask your questions on the help forum.
- Or you can chat with the community on Gitter.

---

# Join an event

- Many Galaxy events across the globe
- Event Horizon: [galaxyproject.org/events](https://galaxyproject.org/events)

???

- There are frequent Galaxy events all around the world.
- You can find upcoming events on the Galaxy Event Horizon.

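---

# Sketch: why LoRA trains so few parameters

A rough illustration of the low-rank idea behind LoRA, written in plain Python rather than taken from the tutorial. The frozen weight matrix `W` is left untouched and only two small matrices, `A` (rank x d_in) and `B` (d_out x rank), are trained. The layer width of 1024 and the rank of 4 are illustrative assumptions, not ProtT5's actual configuration; the 3.5 million figure quoted in this deck depends on which layers receive adapters.

```python
def lora_param_counts(d_in, d_out, rank):
    """Trainable parameters for one weight matrix: full fine-tuning vs. LoRA."""
    full = d_in * d_out            # every entry of W is updated
    lora = rank * (d_in + d_out)   # only A (rank x d_in) and B (d_out x rank)
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha=1.0):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen."""
    rank = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


full, lora = lora_param_counts(d_in=1024, d_out=1024, rank=4)
print(full, lora, full // lora)  # 1048576 8192 128

W = [[1.0, 2.0], [3.0, 4.0]]   # frozen pre-trained weights (toy values)
A = [[1.0, 1.0]]               # rank-1 down-projection
B_zero = [[0.0], [0.0]]        # B starts at zero: output equals the frozen model
B_trained = [[1.0], [2.0]]     # toy values standing in for a trained B
x = [1.0, 1.0]
print(lora_forward(W, A, B_zero, x))     # [3.0, 7.0]
print(lora_forward(W, A, B_trained, x))  # [5.0, 11.0]
```

With `B` initialised to zero the adapted layer reproduces the pre-trained output, so fine-tuning starts from the original model's behaviour.
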
---

### Key points

- Training a very large deep learning model from scratch on a large dataset requires expertise and compute power
- Large models such as ProtTrans are trained using millions of protein sequences
- They contain significant knowledge about context in protein sequences
- These models can be used in multiple ways for learning on a new dataset, such as fine-tuning, embedding extraction, …
- Fine-tuning using LoRA requires much less time and compute power
- Downstream tasks such as protein sequence classification can be performed using these resources

---

## Thank You!

This material is the result of collaborative work. Thanks to the [Galaxy Training Network](https://training.galaxyproject.org) and all the contributors!

<div markdown="0">
<div class="contributors-line">
<table class="contributions">
<tr>
<td><abbr title="These people wrote the bulk of the tutorial, they may have done the analysis, built the workflow, and wrote the text themselves.">Author(s)</abbr></td>
<td> <a href="/training-material/hall-of-fame/anuprulez/" class="contributor-badge contributor-anuprulez"><img src="https://avatars.githubusercontent.com/anuprulez?s=36" alt="Anup Kumar avatar" width="36" class="avatar" /> Anup Kumar</a> </td>
</tr>
<tr class="reviewers">
<td><abbr title="These people reviewed this material for accuracy and correctness">Reviewers</abbr></td>
<td> <a href="/training-material/hall-of-fame/bgruening/" class="contributor-badge contributor-badge-small contributor-bgruening"><img src="https://avatars.githubusercontent.com/bgruening?s=36" alt="Björn Grüning avatar" width="36" class="avatar" /></a><a href="/training-material/hall-of-fame/teresa-m/" class="contributor-badge contributor-badge-small contributor-teresa-m"><img src="https://avatars.githubusercontent.com/teresa-m?s=36" alt="Teresa Müller avatar" width="36" class="avatar" /></a><a href="/training-material/hall-of-fame/martenson/" class="contributor-badge contributor-badge-small contributor-martenson"><img src="https://avatars.githubusercontent.com/martenson?s=36" alt="Martin Čech avatar" width="36" class="avatar" /></a><a href="/training-material/hall-of-fame/dadrasarmin/" class="contributor-badge contributor-badge-small contributor-dadrasarmin"><img src="https://avatars.githubusercontent.com/dadrasarmin?s=36" alt="Armin Dadras avatar" width="36" class="avatar" /></a></td>
</tr>
</table>
</div>
</div>
<div style="display: flex;flex-direction: row;align-items: center;justify-content: center;">
<img src="/training-material/assets/images/GTNLogo1000.png" alt="Galaxy Training Network" style="height: 100px;"/>
</div>

Tutorial Content is licensed under <a rel="license" href="http://creativecommons.org/licenses/by/4.0/">Creative Commons Attribution 4.0 International License</a>.<br/>